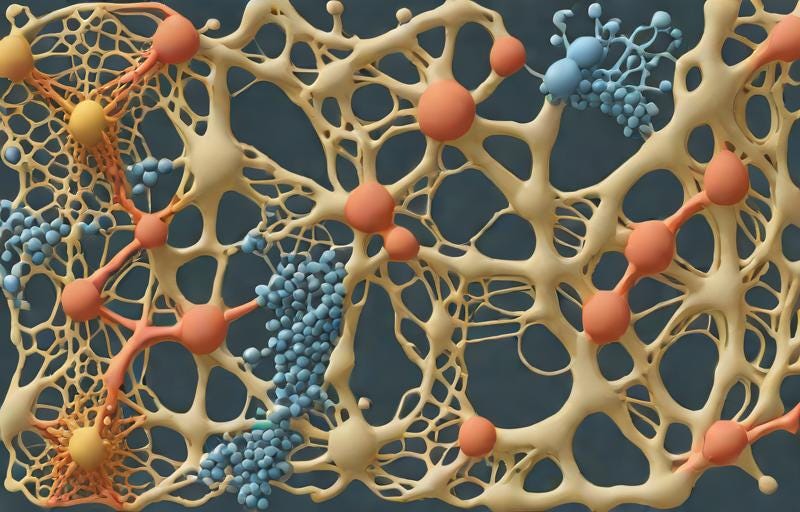

DeepMind Takes on Protein Folding

Done with games, the famous AI company is now taking on biology. But is it a breakthrough, or more hype?

Hi everyone,

Thank you for your continued support! It has been truly wonderful.

I’ve been thinking about the problem of protein folding, so I decided to tackle it here. I hope this post improves your understanding, if not of protein folding itself, then of the current efforts to use deep neural networks to “crack the code.” I hope you enjoy it.

Subscribe to Colligo to keep reading this post and get 7 days of free access to the full post archives.