Extinct: How Humanity's Worst Traits Are Driving Species (And Us) Toward Oblivion

From douchebags to doomsdayers, a field guide to the human subtypes wiping out animals—and threatening ourselves.

Hi everyone,

Lately, I’ve been exploring the concept of species extinction and, through some curious connections, I’ve arrived at an unexpected insight: the extinction of animals and the persistence of certain human subtypes might just be linked. From douchebags to jerks, assholes, creeps, pricks and others, these characters seem to thrive while the animal kingdom steadily declines. I first stumbled upon this while uncovering some research highly suggestive of an inverse correlation between the number of assholes and the number of passenger pigeons. My next step was to look for a mechanism: a causal connection. Let’s proceed.

In what follows, I’ll present my research on these colorful human subtypes—who they are, how they function, and why they persist against all odds. I encourage you to think of these human subtypes as akin to species, as it will help illuminate the peculiar way in which they evolve, thrive, and often outlast other forms of life. I have some surprising connections to report here, and I’m excited to be getting this out for perusal.

I’ll also address a growing concern over the possible extinction of the human race itself. This fear is most commonly expressed by what I like to call Existential Worriers, a group marked by their delusional psychology, specifically in relation to unknowable future outcomes. These folks are experts in magical thinking and far-too-confident predictions about probabilities, like humanity’s inevitable doom. Spoiler: they’re usually wrong, but don’t tell them that.

So, consider this “The Prolegomena to Vanishing Species”—a guide to map out the human terrain before we dive into notable extinction cases. And if any of this sounds confusing—don’t worry. You’re probably just not that smart.

Prolegomena to Vanishing Species: A Field Guide to Human Subspecies

Or, Why We Need Taxonomy for People Too.

Before we dive into the sad, hilarious, and totally avoidable stories of extinction, we need to address the very human categories that roam among us. Just like animals, not all humans are created equal—some are jerks, some are pricks, and others? Well, let’s just say evolution didn’t do them any favors. For your education (and amusement), here’s a quick and dirty guide to the finer points of human subspecies classification.

1. Douchebag

The Corporate Tool Who Thinks He’s Better Than You.

Genetics: If a protein shake married a LinkedIn influencer and had a baby with a Bluetooth headset.

A douchebag is that guy in the office who drinks protein shakes at 10 AM, calls every meeting “a huddle,” and uses phrases like “let’s circle back” as if they contain real meaning. Think Bill Lumbergh from Office Space—he’s always the one you have to deal with, but he thinks he’s the one making the tough calls. He’s not particularly malicious, but the overwhelming smugness? That’s his defining trait.

Species Marker: The Bluetooth headset that he never takes off, even when he's in a quiet room.

Extinction Status: Sadly, not extinct. They thrive in open office spaces and LinkedIn posts.

Example: The guy who tells you about his CrossFit PR when you’re just trying to enjoy your lunch.

2. Jerk

The Low-Level Offender Who Thrives on Minor Annoyance.

Genetics: If a mosquito married a bad Yelp review and had a baby that always cuts in line.

Jerks are the gnats of the human world—small, irritating, and everywhere. They don’t ruin your life, but they sure make it harder to enjoy a happy hour in peace. A jerk is the person who cuts in line at Starbucks and orders the most complicated drink ever, or the guy who parks so close to your car you can’t open the door. Think Chet from Weird Science. He’s not out to destroy your world, but he sure enjoys watching you squirm.

Species Marker: Cars with bumper stickers ….

Extinction Status: Jerks? Ubiquitous. They are, tragically, highly adaptable to every social environment.

Example: The dude who calls your favorite band “overrated” and can’t let it go.

3. Prick

The Rich-Kid, Entitled Version of the Jerk.

Genetics: If a country club locker room married a black credit card and had a baby that never tips.

A prick is what happens when you take a jerk, add a trust fund, and give him a Porsche at 16. He’s the guy who knows the rules but considers himself exempt because, well, his last name’s on a building somewhere. Hardy Jenns from Some Kind of Wonderful is the quintessential prick—an asshole with a platinum card and zero self-awareness.

Species Marker: Designer sunglasses, worn indoors.

Extinction Status: Never. Pricks thrive in every tax bracket but really come into their own with wealth.

Example: The guy who tells the bartender, “Do you know who I am?” while trying to skip the line.

4. Asshole

A Jerk Who’s Found Success—And Now He’s Dangerous.

Genetics: If Napoleon married Gordon Gekko and had a baby with a personalized license plate that reads “WINNER.”

An asshole is a jerk with a corner office. Once a jerk gets a little power—whether that’s in business, life, or the neighborhood HOA—he graduates to asshole status. Colonel Nathan R. Jessup from A Few Good Men is a prime example. He’s got power, he knows it, and he’s going to make sure you know it too. Assholes live for the moment they can make someone else feel small.

Species Marker: Expensive watch and an even more expensive opinion about everything.

Extinction Status: Thriving. Assholes evolve quickly and dominate corporate and political environments.

Example: The guy who belittles the waiter for bringing “sparkling water” when he clearly asked for “still.”

5. Tool

The Guy Who Lives by the Rules, and Will Make You Do the Same.

Genetics: If an Excel spreadsheet married a DMV employee and had a baby with a clipboard obsession.

A tool is a specific breed—a cross between a douchebag and a bureaucrat. He’s the guy who makes sure everyone follows the rules, not because they’re right, but because he’s empowered by enforcing them. Think Guy Pearce’s character in L.A. Confidential—all by-the-book, no room for deviation, and absolutely no fun.

Species Marker: Clipboards, spreadsheets, and a smug sense of duty.

Extinction Status: You can’t escape them. Tools are immortal.

Example: The guy in the group project who reminds the teacher that homework was due.

6. The Creep

Slippery and Socially Unaware—You Definitely Don’t Want to Meet One.

Genetics: If a fogged-up window married the "block user" button and had a baby with no sense of personal space.

Creeps are their own special brand of off-putting. They hover at the edge of the party, slightly too close, but never really engage. They’re always there, but never in a way you can quite explain. Think of Newman from Seinfeld—constantly lurking, vaguely unsettling. Creeps are social predators, not because they’re dangerous, but because they’re just so weird about it.

Species Marker: Unwashed jeans, unnecessary lingering.

Extinction Status: Persistent, like the smell of mildew. They seem to thrive in basements and comic conventions.

Example: The guy who won’t stop staring but says, “I was just looking at the wall behind you.”

7. The Tyrant

Assholes with Power: Run When They’re in Charge.

Genetics: If Napoleon married Miranda Priestly from The Devil Wears Prada and had a baby with a sword.

Give an asshole some power, and you’ve got yourself a Tyrant. They’ll micromanage, control, and generally make sure your life is a misery, all while staying completely oblivious to their destructive behavior.

Species Marker: The clipboard that’s never far from their grasp, no matter the situation.

Extinction Status: Thriving in corporate and bureaucratic environments where power goes unchecked.

Example: The boss who schedules 6 AM meetings just to prove a point.

9. The Perv

Always Creepy, Sometimes Sleazy, and Never Welcome.

Genetics: If Pepe Le Pew married Patrick Bateman from American Psycho and had a baby that got kicked out of every club.

A perv is marked by an undeniable creepiness, and unlike a sleaze, they rarely bother with charm. They push boundaries and ignore social cues, leaving everyone around them cringing.

Species Marker: The greasy grin that gives you instant regret for making eye contact.

Extinction Status: Sadly persistent, lurking in dark corners of both real and digital spaces.

Example: The guy who “accidentally” brushes up against you in a crowded elevator and grins.

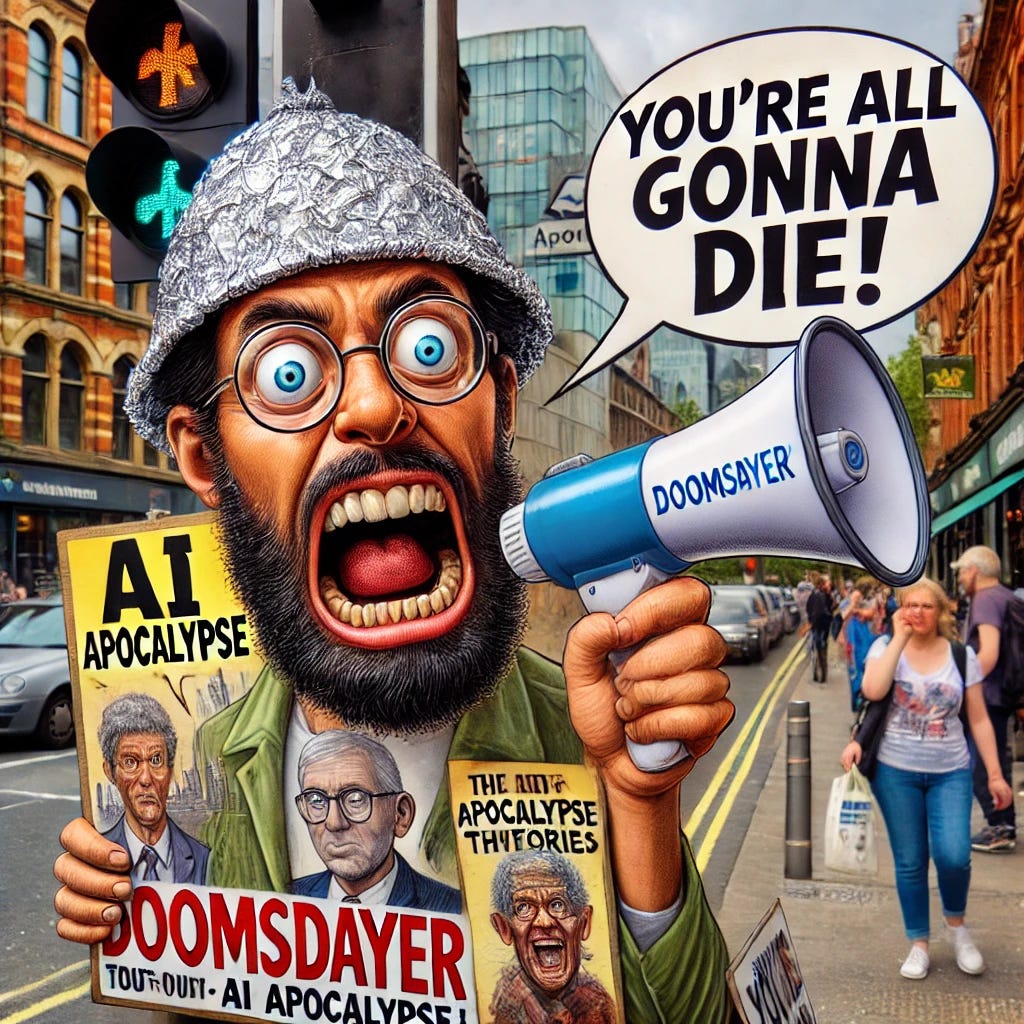

10. Doomsdayer

The Guy Who’s 100% Sure of What’s Going to Happen—and He’s Always Wrong.

Genetics: If Nostradamus married a Bitcoin miner and had a baby with a tinfoil hat.

You know this guy. He’s been predicting the end of the world, the next stock market crash, or the absolute rise of cryptocurrency (when it’s already tanking). The Doomsdayer knows what’s going to happen because, obviously, he’s an expert. He spends hours doomscrolling, coming up with all the ways humanity is going to implode, and he just can’t wait to tell you all about it.

Species Marker: Follows every conspiracy theory and spouts out “data” like a chatbot gone rogue.

Extinction Status: They’ll be around forever, because they’re always almost right.

Example: The guy who’s been stockpiling canned beans for 20 years because the apocalypse is “right around the corner.”

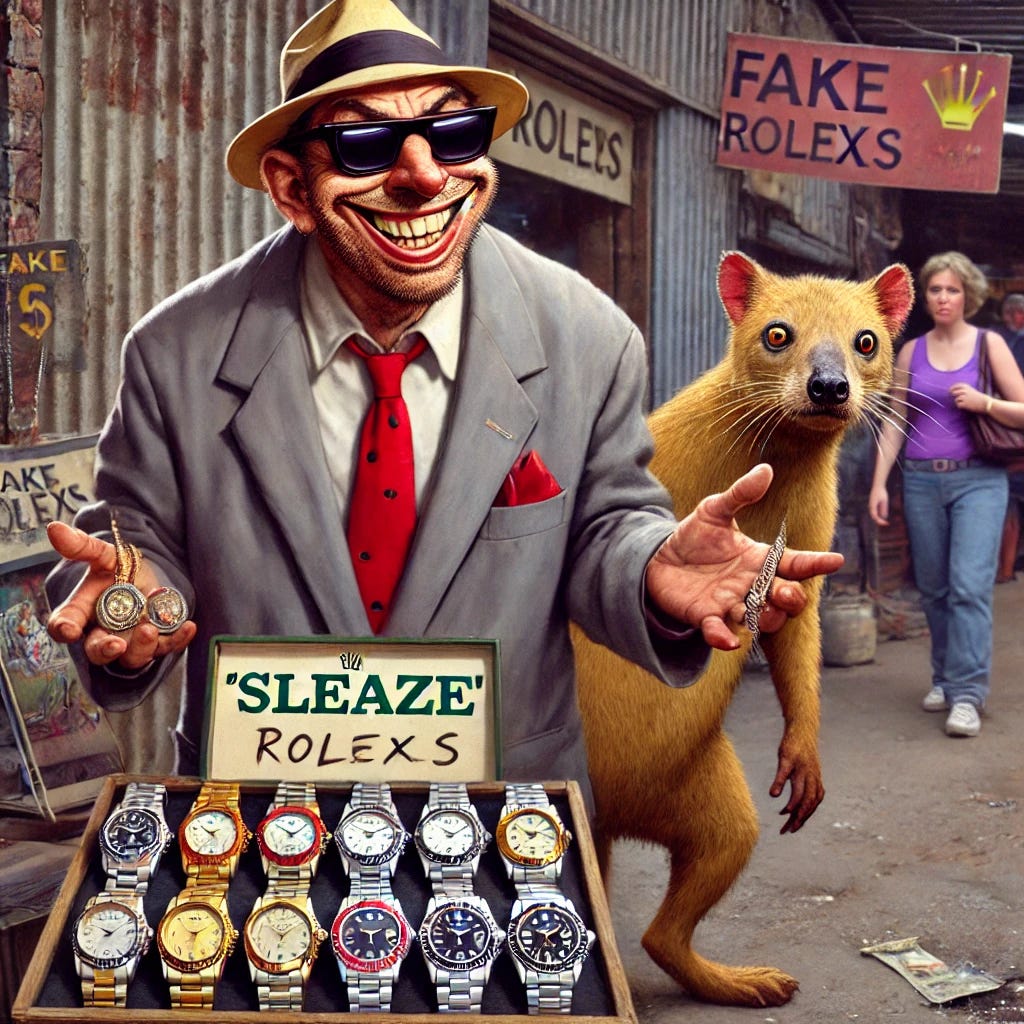

11. The Sleaze

The smooth-talker who’ll compliment your style while stealing your wallet.

Genetics: If Joey Tribbiani from Friends married Saul Goodman from Better Call Saul and had a baby who sells fake Rolexes.

The Sleaze is the awkward lovechild of Joey’s “How you doin’?” charm and Saul Goodman’s shady schemes. Add in a dash of Creep and a sprinkle of Asshole, and you get a guy who can talk you into anything, usually something illegal.

Species Marker: The slicked-back hair and shifty eyes that immediately scream “trouble.”

Extinction Status: Immortal in shady industries, where they reinvent themselves with every scam.

Example: The guy who offers to “double your money” in a deal that’s definitely not legal.

How Pricks, Assholes, Jerks, and Douchebags Wiped Out Entire Species, and Doomsdayers Are Wiping Out Ours

Douchebag → Baiji Dolphin

The Douchebag Industrial Complex

The Baiji dolphin, once a graceful inhabitant of the Yangtze River, fell victim to the relentless industrialization of China’s booming economy. Factories lined the riverbanks, spewing pollution into the water, driven by the short-sighted ambitions of corporate douchebags more interested in quarterly profits than environmental consequences. The rapid development, spearheaded by these titans of obliviousness, decimated the Baiji’s habitat through unregulated shipping, damming, and toxic waste. By 2006, the Baiji was declared functionally extinct, a casualty of unchecked corporate greed and industrial recklessness.

Jerk → Carolina Parakeet

The Jerk Farmers’ Vendetta

Once a common sight across the eastern United States, the Carolina parakeet was a vibrant bird species known for its colorful feathers and noisy flocks. Unfortunately for the parakeets, they also had a taste for fruit crops, earning them the eternal ire of farmers—your classic jerks who couldn’t be bothered with humane deterrents. Rather than adapting to coexistence, these farmers took up arms, shooting the birds en masse as a “solution” to their parrot problem. The last known bird died in captivity in 1918. Thanks to these short-sighted jerks, an entire species was wiped out.

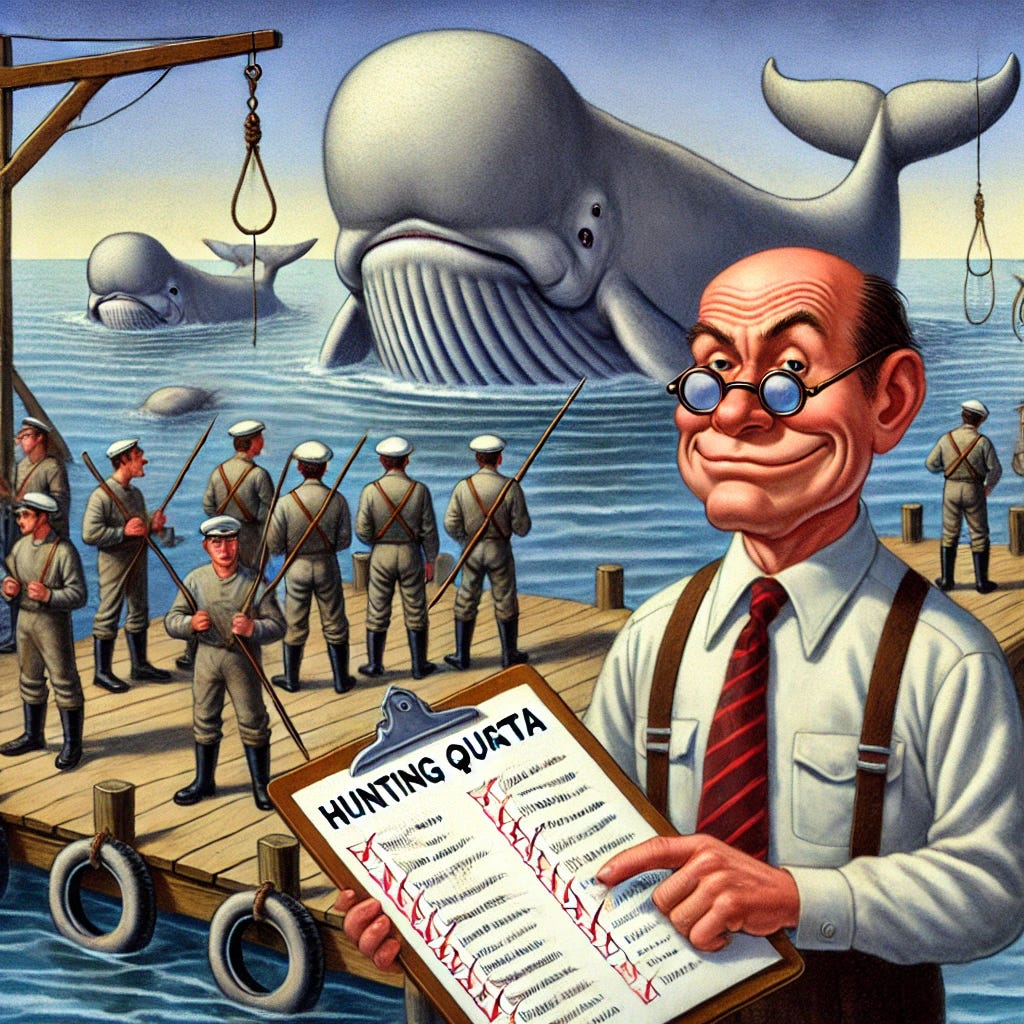

Prick → Passenger Pigeon

The Prick’s Sporting Event

The story of the passenger pigeon is one of staggering abundance, followed by a precipitous decline at the hands of the hunting elite. In the 19th century, these birds darkened the skies with their massive flocks, billions strong. But this abundance proved too tempting for wealthy pricks who viewed the passenger pigeon as little more than flying target practice. Organized pigeon hunts became a grotesque sport, with affluent pricks like General Leonard Wood leading the charge. In 1914, the last known passenger pigeon, Martha, died in the Cincinnati Zoo.

Asshole → Dodo

The Asshole Sailors

The dodo bird, native to the isolated island of Mauritius, had the unfortunate fate of meeting asshole sailors of the 17th century. These flightless birds had no natural predators, and their trusting nature made them easy targets for sailors who stopped on the island for food and sport. Not only did these assholes hunt the dodos to near extinction, but they also introduced invasive species like rats and pigs that decimated the dodo’s ecosystem. By 1681, the last dodo was dead.

Tool → Steller’s Sea Cow

The Tool’s Efficient Hunt

Steller’s sea cow, a massive marine mammal discovered in the icy waters of the North Pacific in the 18th century, was a gentle herbivore with no natural defenses. But when Russian explorers found them, these tools saw an opportunity for efficient resource exploitation. Driven by strict adherence to procedure and order, Russian hunters systematically slaughtered the sea cows for their meat and fat. In just 27 years, these animals were hunted to extinction.

Creep → Great Auk

The Creep’s Lurking Obsession

The Great Auk, a large flightless bird native to the North Atlantic, was hunted to extinction in the 19th century. These penguin-like creatures were once plentiful, but as they became rarer, they attracted the attention of collectors—creeps obsessed with owning a piece of something rare. Icelandic fishermen, driven by the demands of these social predators, hunted the last breeding pair in 1844. The Great Auk’s extinction shows how creeps, with their invasive tendencies, often cause irreparable damage.

Sleaze → Tasmanian Tiger (Thylacine)

The Sleazy Bounty Hunter

The Tasmanian tiger, or thylacine, was once the apex predator of Tasmania’s rugged landscape. However, by the early 20th century, a campaign of government-sponsored bounties turned sleazy bounty hunters against them. These opportunists, driven by easy money, exaggerated the thylacine’s threat to livestock, killing them by the hundreds. In 1936, the last known thylacine died in captivity, a victim of greed and misinformation.

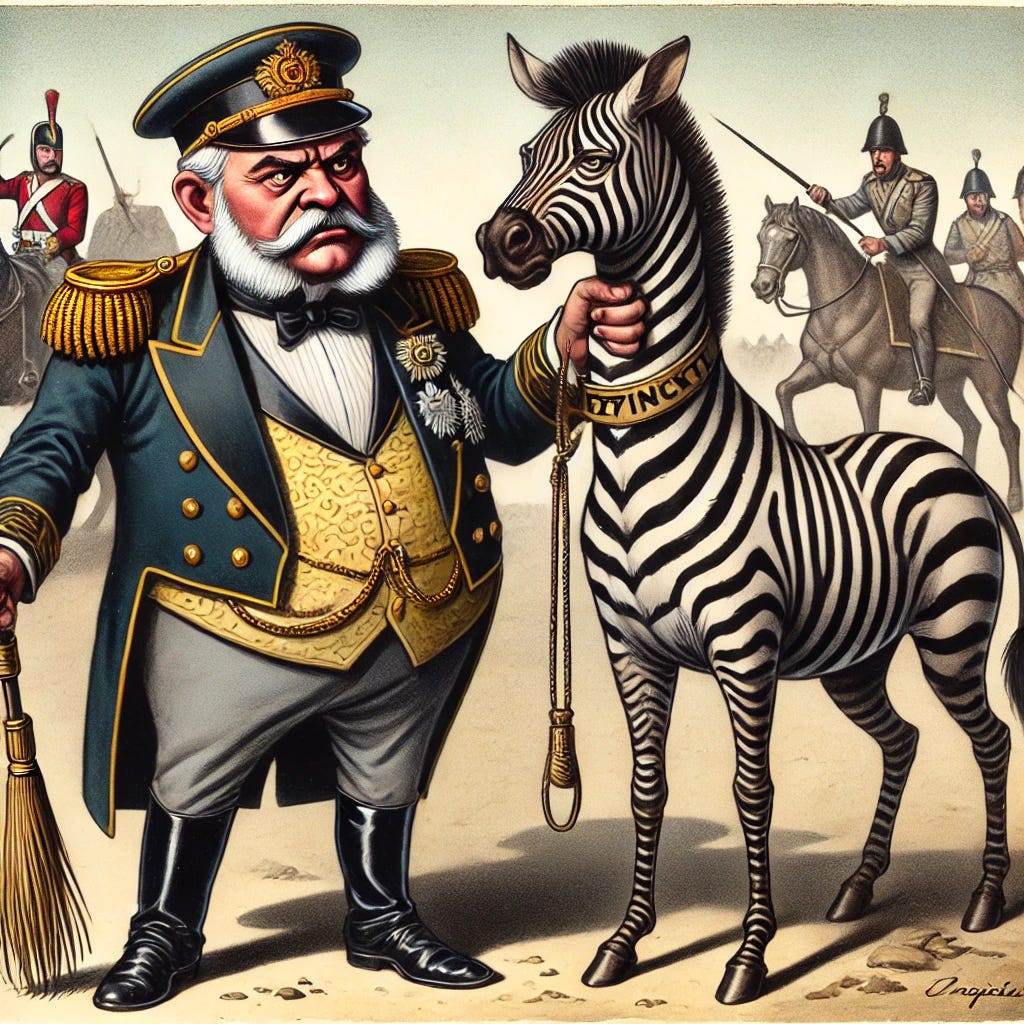

Tyrant → Quagga

The Tyrant’s Colonial Ambition

The quagga, a striking half-zebra, half-horse creature native to South Africa, fell victim to European colonial settlers of the 19th century. These tyrants, viewing the quagga as competition for grazing land, hunted the animals ruthlessly to clear the way for farming. Driven by their desire to control and dominate the environment, they obliterated quagga populations. The last known quagga died in a zoo in 1883.

Doomsdayer → Homo sapiens sapiens

The Doomsdayer’s Real Legacy: Undermining Human Potential

Doomsdayers appear to be an outlier in this taxonomy, as they pose a threat not to another species, but to themselves—which is to say, the rest of us. Purveyors of gloom with their obsession over hypothetical futures—particularly the idea of AI overthrowing humanity—are doing more harm than any asteroid could ever dream of. Here’s why: by consistently downplaying human intelligence and ingenuity, Doomsdayers set dangerously low standards for what we can achieve, all while incentivizing themselves to find evidence that we’re failing to meet even those. Nothing says “bye, species!” quite like the constant drone of “your computer is smarter than you, and probably plotting your demise.” It’s as if they’re trying to manifest a future where we’re collectively outwitted by a glorified calculator.

Further, Doomsdayers are uniquely disposed to ignore actual, present-day threats while loudly nail-biting about speculative future outcomes. Their worldview mixes magical thinking with a statistically overconfident flair (think of them as doomsday prophets with spreadsheets). While they sound the alarm about AI takeovers or climate catastrophes, they distract us from real, immediate dangers that could genuinely end civilization: superbugs capable of sparking pandemics, cyberattacks on critical infrastructure, the erosion of democracy through misinformation, nuclear conflict, and Taylor Swift. These threats aren’t theoretical—they’re happening right now. Yet, Doomsdayers are too engrossed in their dystopian fantasies to notice.

In the end, the real extinction event won’t come from a cosmic accident—it’ll come from Doomsdayers convincing us we’re not smart enough to survive.

Conclusion: A Species on the Brink of Its Own Making

After investigating these human subtypes and their catastrophic impact, results show that the extinction of species—whether it’s the Baiji dolphin, passenger pigeon, or the humble quagga—can be traced back to a potent combination of greed, arrogance, and pure cluelessness. Perhaps most unsettling is my finding that we may be the first species on Earth to bring about our own extinction—not through some external disaster, but through our own behavior.

From douchebags prioritizing profits over ecosystems to doomsdayers distracting us with apocalyptic fantasies of AI overthrowing humanity, the greatest threat to our survival might just be... ourselves. Whether it's thinking we’re too smart for humility or constantly beating ourselves up as yesterday’s news, we seem bent on speeding toward oblivion. And it's not just AI. Between chasing the next tech miracle, ignoring the real threats already at our doorstep, and treating every trend as a crisis, we’re perfecting the art of distraction just when we need clarity most. The real danger may not be that machines are becoming too intelligent, but that we’re losing sight of our own intelligence—and with it, the will to survive.

Erik J. Larson

You could add two more vital types to this typology:

The Poseur puts up a veneer of sophistication on any given topic and has many ready-at-hand opinions on it, but, when pressed to engage in a deeper way on the topic, has only a very superficial understanding of it.

The Crank is like the Poseur, but instead of projecting sophistication, he insists on his originality and has spent lots of time thinking about some specific, narrow theory; unfortunately, the Crank lacks the talent and the critical thinking skills to actually have original ideas and connect them to anything in an established body of knowledge. He becomes bitter and dissociated from reality.

Maybe for the Poseur you could include the "species" of raccoons on certain Caribbean islands that are just common raccoons in an unusual niche:

https://en.wikipedia.org/wiki/Island_raccoon

For the Crank, you have to pick an extinct species that never existed in the first place, like the "Hunter Island penguin":

https://www.smithsonianmag.com/smart-news/extinct-penguin-actually-never-existed-first-place-180964556/

We would not be the first species to bring about our own extinction, for starters: in fact, given evolution, we have wiped out most of our previous iterations (the same can be said of a lot of other animals).

While quite chill for us, that was not great for, say, Homo erectus. That said, I do not think that creating deepfake clones of human beings as ghosts in the machine (something entirely possible right now) counts as anything like a "descendant of humans" while killing our actual children.

The argument that existential risks exist does not mean that there aren’t other risks. That is like saying that a bacterium that kills you doesn’t also cause infection, bad smell, and local organ failure first. Of course it does, but it is the death of the entire person that is pretty important to note.

The argument that "humans are just too clever to die" is a "hope" argument. Hope is not a strategy and the evidence is strongly on the negative side. Nobel Prize winners have emphasized this recently, but one does not need to appeal to authority, only evidence.

So yes, if we are to find a future, the answer is to acknowledge and deal with the risks of AI, with evidence and awareness of it.

I want denial to be right. I want the risks to be silly. But the evidence is what matters, and dealing with reality is what we have to do in order to survive.

I am posting Yoshua Bengio's excellent reply to the mundane "don’t worry, it’s all fine" arguments:

https://yoshuabengio.org/2024/07/09/reasoning-through-arguments-against-taking-ai-safety-seriously/