Foundational Technologies: Are LLMs the Next Stepping Stone?

Innovations build on each other. Are LLMs the final technology, or the beginning of something else?

Standing on the Shoulders of Giants?

Hi everyone,

The question I take up here is not what LLMs are, but how they relate to other technologies, in particular the ones that came before them. Are they the beginning of something, or the end of something? Let’s dig in.

Foundational Technologies

I’ve been fascinated by innovation for most of my life, though, in truth, I’ve written more about it than done it—at least when it comes to commercial technology. My R&D record, including a patent application, is a bit more impressive. As readers here know, I’ve written extensively on 21st-century technology innovation, especially on big-picture items like the web and AI. Over time, you see trends come and go, but you also see what I call foundational technologies embed themselves into the societal infrastructure, where they will likely remain for a very long time.

Some obvious examples include telecommunications infrastructures, routers, and operating systems. Only slightly less obvious are technologies like HTML and the web, social networks, and data-driven online monetization. In the 21st century, AI has brought about a sea change. As I’ve pointed out many times before, in 2000, “Good Old-Fashioned AI” (GOFAI) shops like Doug Lenat’s Cycorp could still boast to, and largely bamboozle, an uninformed public and media about the coming wonders of knowledge-based reasoning.

By 2005, however, no one in the know believed that path held any promise for AI. GOFAI survived, and to some extent still exists today, as a component within larger systems. It adds value in situations where purely empirical or data-driven methods fall short: when they are clunky or error-prone, or when a paucity of trainable data limits their effectiveness.

Keep It Coming

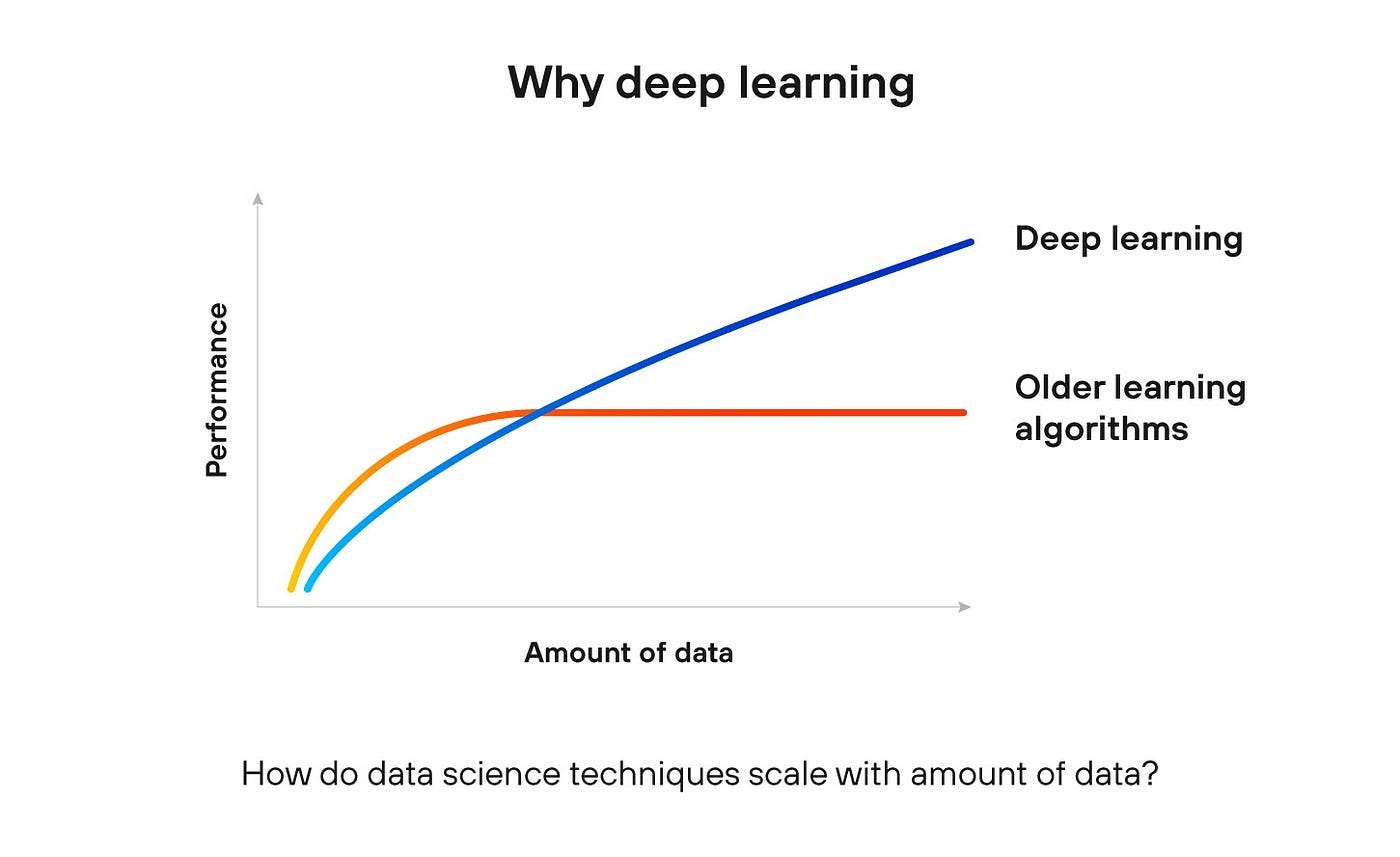

We all know by now that the latest wave of innovation is actually over a decade old. It began when deep neural networks (DNNs) made a splash in popular contests like the ImageNet challenge. A simple way to understand what happened with DNNs is that they extended traditional machine learning approaches, but thanks to their more complex, multi-layered (“deep”) architecture, they kept responding positively to increases in training data. While other models would plateau or see performance saturation, DNNs continued improving across a range of tasks: self-driving, facial and object recognition, speech-to-text, language translation, commercial applications like personalized recommendations, and more.

The web—and its ever-growing user base—kept feeding the machines with more trainable content in the form of text, images, sound, and video. The AI systems, in turn, crunched that data and spit out increasingly accurate results. The feedback loop was relentless and impressive. That was then.

Legendary Launch

Our most recent innovations are built directly on top of the deep neural network revolution, which also includes all the progress made with GPUs and specialized chip design. Foundational models—of which large language models (LLMs) are the flagship—owe their existence to several key shifts: (a) the move from GOFAI to machine learning in the 2000s, (b) the availability of orders of magnitude more trainable data, (c) the power of Moore’s Law, and (d) the DNN breakthroughs of the early 2010s.

Though foundational models were already in use before the now-legendary ChatGPT launch in November 2022—take BERT, for example, which I was using a year earlier—it wasn’t until that release that we saw the bizarre leap in performance. Before ChatGPT, no one was calling BERT an “alien intelligence.” That 2022 release marked a new layer of innovation, building directly atop the advances that came before it.

Attention is all you need

Pay Attention

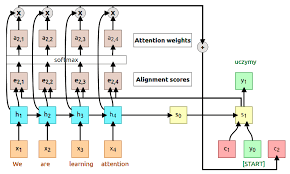

In a sense, the innovation laid out in the now-famous Google paper “Attention Is All You Need” is a natural consequence of continuing the trajectory of larger DNNs paired with faster GPUs. The key breakthrough of the Transformer architecture it introduced is that it relies on the "attention mechanism" alone, dispensing with older machinery like recurrent networks and fixed sliding windows, which forced models to process language sequentially or in small chunks. Instead, attention can examine an entire sequence of tokens, like a full sentence, all at once. This elegantly addresses one of the long-standing problems in natural language processing (NLP): “long-range dependencies.” In earlier models, when important context or meaning was spread across distant words in a sentence, it often got lost in translation.

Getting Lost in Translation Before ChatGPT

Consider this sentence:

"The man who ran the marathon in record time is a world champion."

In this sentence, the subject ("the man") and the predicate ("is a world champion") are far apart, with a relative clause ("who ran the marathon in record time") in between. A model needs to understand that "the man" is what "is a world champion," even though many tokens separate them. Earlier models, which processed sentences sequentially and only retained a small window of context, struggled to make such connections.
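To make the window problem concrete, here is a minimal Python sketch. It assumes naive whitespace tokenization and a hypothetical fixed window of 4 tokens; real pre-Transformer models varied, and recurrent networks had no hard window at all, though in practice they tended to lose distant context. The sketch only measures how far apart "man" and "is" sit and checks whether any window of that size could contain both.

```python
# Minimal sketch: why a small fixed context window misses the subject-predicate link.
# Assumptions (illustrative only): naive whitespace tokenization and a 4-token window.

SENTENCE = "The man who ran the marathon in record time is a world champion."
WINDOW = 4  # hypothetical context size, not taken from any specific model


def tokenize(text: str) -> list[str]:
    """Lowercase, drop the final period, and split on whitespace."""
    return text.lower().rstrip(".").split()


def token_distance(tokens: list[str], a: str, b: str) -> int:
    """Positions between the first occurrences of tokens a and b."""
    return abs(tokens.index(b) - tokens.index(a))


def fits_in_window(distance: int, window: int) -> bool:
    """Two tokens share some run of `window` consecutive tokens only if distance < window."""
    return distance < window


tokens = tokenize(SENTENCE)
gap = token_distance(tokens, "man", "is")
print(f"{len(tokens)} tokens; 'man' and 'is' are {gap} positions apart")
print(f"visible together in a {WINDOW}-token window: {fits_in_window(gap, WINDOW)}")
```

Under these assumptions the two words are 8 positions apart, so no 4-token window ever sees them together; a window would have to span at least 9 tokens.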

The attention mechanism, however, can "attend" to the entire sentence at once, allowing it to easily connect "the man" with "is a world champion," regardless of the distance between these words. This solves the problem of long-range dependencies, which tripped up older architectures.

This isn’t quite the full story, however. The brilliance of the attention mechanism lies in its design, where each token is assigned three vectors: a query, a key, and a value. A token’s query is compared against the keys of all the tokens in the sequence to determine how relevant each one is to it, while the value carries the actual information that gets passed along. This allows tokens to "announce" their role and importance in the sequence ("Hey, I’m a noun!") and interact with other tokens dynamically. As a result, the model can focus on the most relevant parts of the input, an advance that all but eliminates the once thorny problem of long-range dependencies, at least within the span of text the model can take in at once.
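For readers who want to see the query/key/value idea in miniature, here is a minimal pure-Python sketch of scaled dot-product attention, softmax(QKᵀ/√d)·V, the core operation described in “Attention Is All You Need.” It is an illustration under loud assumptions, not how production models work: the 2-dimensional vectors are hand-assigned toy values (dimension 0 plays the role of "I’m a subject noun"), whereas real models learn their query, key, and value projections from data and stack many attention heads and layers. What it does show is that every token’s query is scored against every token’s key in one step, so the fact that "man" and "is" sit 8 positions apart makes no difference.

```python
import math

# Minimal sketch of scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.
# All vectors below are hand-made toy values, not learned weights from a real model.


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u: list[float], v: list[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def attention(queries, keys, values):
    """Return (outputs, weights); weights[i][j] is how much token i attends to token j."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Score this token's query against every token's key, near or far.
        scores = [dot(q, k) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # The output is the attention-weighted mix of all value vectors.
        outputs.append([sum(w[j] * values[j][c] for j in range(len(values)))
                        for c in range(len(values[0]))])
    return outputs, weights


tokens = "the man who ran the marathon in record time is a world champion".split()

# Hypothetical 2-d keys: dimension 0 ~ "I'm a subject noun", dimension 1 ~ "I'm a verb".
TOY_KEYS = {"man": [1.0, 0.0], "ran": [0.0, 1.0], "is": [0.0, 1.0]}
keys = [TOY_KEYS.get(t, [0.0, 0.0]) for t in tokens]
values = keys  # reuse the keys as values to keep the toy small

# Only "is" asks a real question: it looks for a subject along dimension 0.
queries = [[6.0, 0.0] if t == "is" else [0.0, 0.0] for t in tokens]

outputs, weights = attention(queries, keys, values)
is_row = weights[tokens.index("is")]
best = tokens[is_row.index(max(is_row))]
print(f'"is" attends most to {best!r} with weight {max(is_row):.2f}')
```

With these toy values, the row of weights for "is" puts most of its mass on "man", while tokens with all-zero queries spread their attention evenly. Dividing by √d is the scaling the paper uses to keep dot products from growing too large as the vector size increases.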

Care For Some Grapes (They’re Sour)

It’s interesting to see what’s happened. I’m often amazed that many of my peers in AI have turned on the technology, treating it as if it’s the worst development the field (especially the NLP subfield) has ever seen. Yet it’s quite likely the first time in AI history that everyone, from your mom to the President of the United States, actually noticed that AI worked. What is progress, exactly, if it’s not, well, making progress?

With GPT models, we see up front the enormous performance gains in conversational AI over earlier systems like Alexa or Cortana. But behind-the-scenes NLP tasks saw huge performance jumps too. Take co-reference resolution, where a model needs to understand that “he” refers to “John” mentioned earlier in the text. Named entity recognition (NER) is another: identifying that “Apple” refers to a company and not a fruit might stump older models. Sentiment analysis, determining whether a text such as a movie review expresses positive or negative feelings, also got a major upgrade with LLMs. The list goes on.

Even if we only consider LLMs through a technical lens, they would still qualify as a monumental innovation, with the attention mechanism being not just hugely effective, but also quite technically clever. If early AI systems were like the Model T of computing, LLMs might be the Tesla Model S—and deep neural networks would sit somewhere in between.

But if we also consider the impact of the technology on the field of AI and its dissemination beyond the field to the world at large, LLMs are clearly a significant leap. They've captured the attention of not only researchers but also industries, governments, and the general public. The widespread use of AI-powered tools in applications like customer service, content creation, and even policymaking makes it clear that LLMs have transcended their original technical scope and embedded themselves into everyday life.

The Third Way (Or, Focus on Innovation, Not Debate)

Foundational technologies build upon each other like layers in a structure, where each innovation supports the next. At the base, you have essential infrastructure like telecommunications and computing advancements, which enable faster processing and data handling. On top of that, technologies like deep neural networks and advancements in GPUs provide the computational power and architectural breakthroughs that drive modern AI. Finally, at the highest layer, we have foundational models like LLMs, which arguably represent the culmination of these prior advancements, drawing on everything below to perform complex tasks like natural language understanding and generation.

This brings me to my main question: are LLMs themselves a budding foundational technology that will spur further innovation (beyond just more LLMs)? I hear little conversation about this today. Most discussions fall into two camps: critics who view LLMs as a disaster for culture and a blemish on AI, and boosters who praise them as if AI didn’t exist until two years ago, and now we’re just waiting for AGI with each upcoming version.

There’s a third group, though—people like me—who acknowledge the enormous leap forward our field took with the advent of these models, but also acutely recognize the problems. Some of these issues are quite trenchant and worrisome, like hallucinations and data quality and availability. And of course, there are many more concerns, many of which are non-technical. This third group wants to call attention to these problems as a warning to those jumping in with both feet. We’re also interested in connecting with researchers and others who dream of more powerful and useful technologies. Today, I see plenty of discussion addressing the former, but much less on the latter. The conversation has become polarized, and I fear that the public is increasingly either worried, ecstatic, or simply overwhelmed.

One way out of this is to ask better questions. The best one I can think of is relational: how do LLMs, or generative AI broadly, relate to other technologies? Are they a new foundational technology? Their value lies not in being the end of the innovation road, but in being an enormous stepping stone. They reveal the problems with data-driven approaches, the limits of inductive reasoning, and the challenge of conceptualizing thought as data. They are also a boon to engineering, making it possible to integrate high-quality language processing into systems. Engineers work with errors to design systems that mitigate them, while scientists see errors as evidence of faulty ideas. How should we approach this divisive and powerful technology? As an AI guy, I see LLMs as flawed, but fruitful for future innovation.

Ten years ago, it would’ve been hard to predict what a large model trained on massive data would actually do. Would it “come alive”? Would it still be clunky and stupid like earlier systems? We now have amazing data on failure points and hallucinations, data we can use to innovate further. Yet much of the conversation remains tone-deaf. The web has, once again, polarized us into arguing instead of thinking.

I find it far more likely that LLMs will lead to new avenues of innovation, rather than being banned or rocketing us to AGI. We’ve learned a lot about artificial intelligence—and possibly our own. I’m inclined to see LLMs as foundational technologies. And I wonder what will come next.

Thank you for this piece, Erik! What came to my mind when reading it was your earlier article https://open.substack.com/pub/erikjlarson/p/ai-is-broken?r=32d6p4&utm_medium=ios, specifically its section “ChatGPT is Cool. Sort Of.” Doesn’t that contradict today’s post? Or is the context different and I misunderstood? 🙏🏻

I am skeptical. There was a demo circulating a while ago about (iirc) an LLM-inspired attention model applied to computer vision, specifically for a driverless car, that showed improved object and path detection that I thought was impressive.

But generally they seem to be a solution in search of a problem. In art and literature they miss the entire point, which is humans conveying meaning to other humans. Without a *mind*, a person experiencing the world, having thoughts about it, and attempting to convey those thoughts to others, generation of images and text is utterly meaningless.

And as sources of truth they are the worst kinds of misinformers, in that not only are they often wrong (like people), but it is impossible to intuit what they are likely to be right about and what they aren't, which makes them exceptionally dangerous. To the extent that these anti-features propagate to other applications of the underlying technology, it is worthless at best and dangerous in general.

Are there pieces of tech under the hood, or general conceptual innovations, with any practical value? I'm sure there's something in there that's valuable.

I'd guess we find some application for quantized tensor operations besides generating textual and visual nonsense, and if we do all those TPUs will finally be more than a waste of electricity.

We'll probably find some use for Stable Diffusion-inspired Photoshop filters once we get past the lens flare phase. And I've personally had some success in using small local LLMs for creative writing assistance, though not to generate the text itself (rather, as a sounding board and worldbuilding assistant, which I've built a simple client around).

I envision some applications in interactive entertainment, to flesh out background characters and the like, similar to how we use other forms of procgen in games and interactive art.

But to your main question, is this actually moving us forward in a meaningful way, it's hard to say. Forward toward what? What do we envision AI actually doing for us? What is it good for, beyond giving us nerds something to be excited about? The answer seems to mainly be driving the marginal cost of labor toward zero. Which causes the neolib managerial class to drool by the bucket but sounds apocalyptic to me. And it's unclear at this point whether machine learning is even doing that, or just automating crap that nobody should have ever been asked to do in the first place, the negative externalities and internalities of managerialism (boilerplate code, paper pushing, the "email job"). Is that what we need AI for? Then yes, LLMs are a huge leap forward.