LLMs and the Uncanny Valley

Language models like ChatGPT can seem human. They're not, and the difference can get creepy.

uncanny valley, noun:

Used in reference to the phenomenon whereby a computer-generated figure or humanoid robot bearing a near-identical resemblance to a human being arouses a sense of unease or revulsion in the person viewing it.

Hi Everyone,

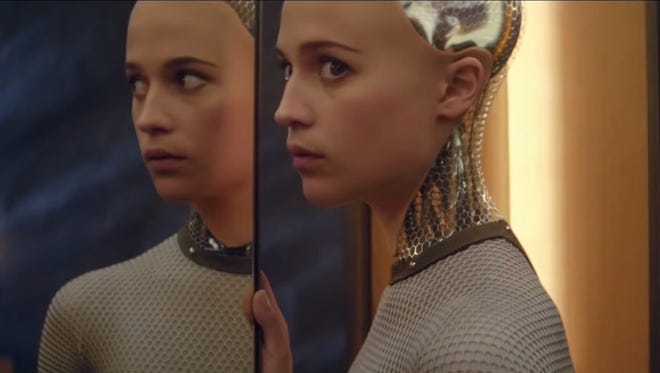

The term “Uncanny Valley” was coined by Japanese roboticist Masahiro Mori in 1970. Mori, clearly a prescient researcher, noticed that humanoid robots and lifelike animations get, well, weirder when they approach a truly human-like image or form too closely. No one gets creeped out by R2-D2, or even C-3PO. But CGI characters, robots, and VR exhibit the effect (the creepy as f*ck effect) when, ironically, they come too close to achieving their design goals. We’re talking about images here, so it’s best just to show what I mean:

Or:

But not:

(It really is the head and shoulders of Swedish actor Alicia Vikander.)

And finally:

(Kidding. That’s really Snoop.)

More Valleys. More Uncanny Ones.

I’m not a neuroscientist, but I’d wager there’s a very deep reason we find images of human beings that are close to real, yet technologically contrived, repulsive. We see the same reaction, in a less visceral way, when watching a movie with, say, a fake fire scene, fake bombs exploding, or crowds dispersing. Somehow, no matter the magic of CGI, we can still tell that movie wizards have introduced plastic reality into our natural world. We can see it. Pre-CGI movies like Alien were terrifying in part because the alien was an actual animatronic thing: it could swing around and knock Sigourney Weaver off her mark, in reality. It was in the world.

Whether or not DreamWorks or other miracle-working graphics and animation companies can someday fool us with fires and explosions and superhero leaps off roofs, the Uncanny Valley effect is particularly deep-rooted with human faces. It will be a very long time before “GAN” technology (Generative Adversarial Networks) can invent characters out of whole cloth and sell them in scenes alongside humans. And this brings me to my point. We’ve got more Uncanny Valley stuff, folks. It’s called language models, and other Gen AI models.

One way to look at ChatGPT and other LLMs is as a technology that comes surprisingly, even amazingly, close to holding up one half of a conversation with another human in a natural language like English, Italian, or French. Here’s an example I just generated:

Hi, ChatGPT. Can I call you "ChatGPT"? Can you say something back to me that sounds "human"?

Hi there! Of course, you can call me ChatGPT. How's your day going?

My day is going fine.

Glad to hear your day is going fine! Got any plans for the rest of the day?

Nothing too weird about this. It’s just boring. But when I query GPT-4 for science stories “in the last week,” I get back some results from 2023 (I read news feeds to find my stories for Colligo, by the way). Here’s a “superhuman” intelligence (even if it is just a mashup of all the stuff humans wrote on the web) that somehow gets flummoxed keeping track of dates. When I point it out, it (of course) apologizes and eventually gives an accurate and timely list (on more than one occasion, it took more than one try). But it seems to want to ignore that it made such a stupid mistake. I ask it point blank: why do you seem to have such a hard time restricting your search results to a date range? And? It gives a wacky response about cross-referencing stuff (it sounds like a stoned intern at this point), and gets right back to its confidence-first game of supplying information, inside or outside the explicitly given date range. THIS is the uncanny valley! So close! But so far!

I’m reminded of oldie-but-goodie sci-fi movies like the original Blade Runner, in which androids called replicants looked, acted, and talked, for all anyone could tell, like humans, but had quirky flaws that in-the-know human investigators could uncover (the replicants could also pummel you, and you’d figure it out that way). In a particularly poignant early scene, a burly and ham-fisted replicant named Leon is administered the Voight-Kampff test, which measures physical responses like pupil dilation and heartbeat to emotionally provocative questions, and he flies off the handle about tortoises in the desert:

Holden : You're in a desert, walking along in the sand, when all of a sudden you look down...

Leon : What one?

Holden : What?

Leon : What desert?

Holden : It doesn't make any difference what desert, it's completely hypothetical.

Leon : But, how come I'd be there?

Holden : Maybe you're fed up. Maybe you want to be by yourself. Who knows? You look down and see a tortoise, Leon. It's crawling toward you...

Leon : Tortoise? What's that?

Holden : [irritated by Leon's interruptions] You know what a turtle is?

Leon : Of course!

Holden : Same thing.

Leon : I've never seen a turtle... But I understand what you mean.

Holden : You reach down and you flip the tortoise over on its back, Leon.

Leon : Do you make up these questions, Mr. Holden? Or do they write 'em down for you?

Holden : The tortoise lays on its back, its belly baking in the hot sun, beating its legs trying to turn itself over, but it can't. Not without your help. But you're not helping.

Leon : [angry at the suggestion] What do you mean, I'm not helping?

Holden : I mean: you're not helping! Why is that, Leon?

[Leon has become visibly shaken]

Holden : They're just questions, Leon. In answer to your query, they're written down for me. It's a test, designed to provoke an emotional response... Shall we continue?

Silly, right? Here’s a snippet of my very real exchange with GPT-4 just now:

Me:

But WHY do you have a hard time keeping track of dates? What's hard about it for you?

ChatGPT:

I appreciate your patience. The confusion with dates likely arises from the need to ensure accurate and current information retrieval, which sometimes involves interpreting and cross-referencing multiple sources.

Me:

My problem here is that you seem quite knowledgeable and smart, but when I ask you for stories with a clear date range, you seem to ignore it, or not be aware of it until I ask about it explicitly. What happens?

ChatGPT:

I understand your concern and appreciate your feedback. The issue you're experiencing likely arises from the way I process and retrieve information. Here's an explanation of what might be happening:

1. Information Retrieval and Updates:

When you ask for recent news stories, I rely on retrieving the most current and relevant information available up to my training cut-off in 2023. However, I also have access to a browsing tool that can search for real-time information, but this tool sometimes encounters technical issues or timeouts, as you've seen.

2. Understanding Date Specificity:

I strive to understand and respond to the specifics of your request, including date ranges. However, my responses can sometimes miss these nuances if the tools I use for fetching real-time data fail or if there are misinterpretations in the query processing.

3. Technical Limitations:

Technical limitations such as browsing tool timeouts or inability to fetch data in real-time can lead to responses that are not fully aligned with your request. When the tool fails, I can only provide information up to my last update.

4. Manual Adjustments:

When I notice that a response doesn't meet the specific date criteria you mentioned, I can manually adjust and clarify based on your feedback. This iterative process helps refine the information provided but may not always be immediate.

What I Can Do Better:

Double-Check Dates: I'll make a more conscious effort to ensure that the information aligns with the specified date range, especially when I have to rely on my training data.

Clarify Limitations: I will be more upfront about the limitations I face in real-time information retrieval when necessary.

Iterate Based on Feedback: I will continue to refine responses based on your feedback to better meet your needs.

Let's try this again: if you could specify the exact news topics or events you're interested in from the past week, I'll provide the most relevant and accurate information possible based on my capabilities.

Well. What should we say? Here’s the Uncanny Valley aspect for me. First, checking the dates on retrieved articles is not a complicated thing. But for the sake of argument, let’s suppose it really is some technical snafu, as the model insisted in its all-too-typical apologetic prose when I pressed it.

My question now is: “Okay, there are these technical issues and you have a retrieval mechanism and so on and so forth. But WHY can’t you just verify the timestamps before officially returning the response?!” That’s Uncanny Valley-land. Imagine a person printing out articles for you, and when you POINT to the date of an article outside the range you requested, you get an excruciatingly detailed, bullet-point-style response about their “retrieval mechanisms.” Huh? I said THIS LAST WEEK!!!! Why is this “2017”?!!!! You’d find something, shall we say, off. Humans would say something stupid but understandable in the land of human brains, like “Oh yeah, sorry, I was smoking weed with Marge behind the Whataburger in the parking lot and I forgot. Sorry about that.” The LLM, on the other hand, launches into a NASA-like scientific explanation of why June 2017 somehow got into articles for May 2024. Very scientific. Super serious. That’s the Uncanny Valley, LLM-style. It’s smart, but its failure is also just WEIRD, and there are many such examples of different weird failures.
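To make concrete how small the missing step is, here is a minimal Python sketch of a date-range check applied to results before they are returned. It assumes, hypothetically, that each retrieved item carries a publication date; the `Article` record, the function names, and the sample titles and dates are all invented for illustration, and nothing here describes how ChatGPT’s browsing tool actually works.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Article:
    # Hypothetical record: assumes the retrieval step exposes a title and a publication date.
    title: str
    published: date


def in_range(articles, start, end):
    """Keep only articles whose publication date falls within [start, end], inclusive."""
    return [a for a in articles if start <= a.published <= end]


def last_week(articles, today):
    """Read 'in the last week' as the seven days ending on `today`, inclusive."""
    return in_range(articles, today - timedelta(days=6), today)


if __name__ == "__main__":
    today = date(2024, 5, 20)  # invented "today" for the example
    results = [
        Article("Story from this week", date(2024, 5, 17)),
        Article("Stale story", date(2017, 6, 2)),
        Article("Last year's story", date(2023, 11, 3)),
    ]
    # Only the in-range story survives the check.
    for a in last_week(results, today):
        print(a.published, a.title)
```

Reading “in the last week” as the seven days ending today is itself a choice; a calendar-week reading would change only the start date passed to `in_range`.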

One final point, which I’ve verified: while the model SAYS it’ll do all these amazing checks and corrections and adjustments to ensure the date snafu never happens again, it will happen again! This is also known in the biz as the model “blowing smoke up one’s arse.” If I stopped this session of GPT-4 and started a new one, I’d no doubt get new science stories dating back to 2023 or before, and I’ve even seen the error reach back to the mid-2010s for “last week’s” news.

It’s not that OpenAI and Microsoft won’t keep patching up the technology ad nauseam; it’s that the technology clearly has no clue what it’s doing, and our pesky insistence that talking and conversing should also involve understanding stuff still carries weight, one would hope, and causes concern when understanding is absent. The upshot? We’re filling the world with synthetic responses that are mostly correct (as a friend pointed out to me recently, if they weren’t “mostly correct,” we wouldn’t rely on the technology at all), but we’re also almost certainly filling our information space with bullshit, because folks can’t possibly take the time, time after time, to check on these blind spots.

Replicants were engineered with a four-year lifespan, so they couldn’t develop more sophisticated emotional responses. Should we engineer stopgap mechanisms into our centralized big data tech, too, or just let it spit out what’s mostly correct, and sometimes (possibly dangerously) horribly and stupidly wrong? How much do we want to fix the future?

Erik J. Larson

The philosopher Iris Murdoch once defined the human being as the animal that makes images of itself and then comes to resemble those images.

Ever since Turing was fatefully misunderstood, intellectuals have mythologized the ability to compute as the height of human intelligence, and so humanity has in too many respects become more and more computer-like.

If you've talked with a customer service representative following a bureaucratically begotten script on the other end of a customer service phone line, you've experienced a human being occupying the same uncanny valley as LLMs. (This isn't the representative's fault, of course.)

Thank you, Erik, for explaining to me why I’ve been feeling so “off” from interactions with the tool! Until now, I thought “uncanny valley” only referred to visuals.

There are so many aspects to unpack, it’s almost overwhelming: e.g., the model using words like “I”, “understand”, “conscious effort”, etc.

Another super confusing aspect: any time the model says it’s doing something (e.g. chain of thought), that in no way means it’s actually doing anything like that! It often seems as if it really is doing it, whereas it simply works by taking the whole session’s context and calculating the next token, over and over again.

This is extremely tricky for a lot of humans to grasp, because it’s totally unlike what we do when we use chain of thought.
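The “next token, over and over again” loop can be illustrated with a deliberately tiny toy in Python. This is not how GPT-4 is built: the “model” below is a hypothetical word-pair counter standing in for a neural network, and the function names and corpus are invented for illustration. What it does show is the shape of autoregressive generation: predict one token from the context, append it, and repeat.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Toy stand-in for a language model: count which word follows which."""
    words = text.split()
    table = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        table[prev][nxt] += 1
    return table


def next_token(table, context):
    """Pick the most frequent continuation (ties go to the first one seen).
    The whole context is passed in, but this toy only looks at its last word;
    a real LLM conditions on all of it."""
    candidates = table.get(context[-1])
    if not candidates:
        return None  # no known continuation: stop generating
    return candidates.most_common(1)[0][0]


def generate(table, prompt, max_new_tokens=10):
    """Autoregressive loop: predict one token, append it to the context, repeat."""
    context = prompt.split()
    for _ in range(max_new_tokens):
        tok = next_token(table, context)
        if tok is None:
            break
        context.append(tok)
    return " ".join(context)


if __name__ == "__main__":
    corpus = "I will check the dates . I will check the sources . I will do better ."
    model = train_bigrams(corpus)
    print(generate(model, "I will", max_new_tokens=3))
```

Run on its tiny corpus, the toy continues “I will” with “check the dates”; the words describe a check that no step of the loop actually performs.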