Stuck On a Hill

An early reflection on the limits of induction and generalization in machine learning.

I wrote this in late 2008, thinking through why no statistical learner can ever quite model the world it’s meant to predict.

Friday, December 12, 2008


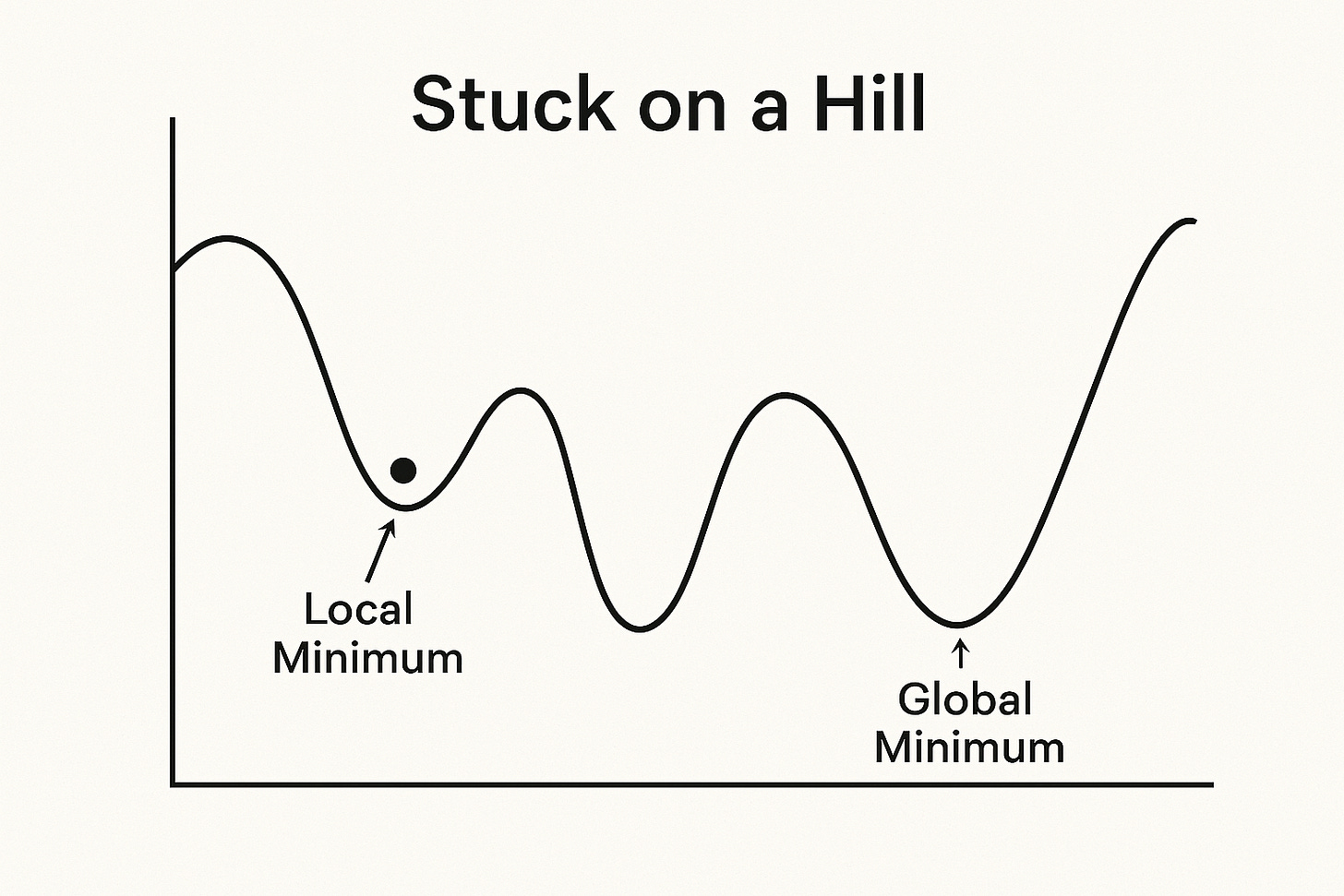

In artificial neural network (ANN) research, there’s a well-known problem of local minima (or maxima). I’ve worked a bit with ANNs but much more with a (superior) learning algorithm, Support Vector Machines (SVMs). Training an SVM is a convex optimization problem, so any minimum it finds is the global one. ANNs have no such guarantee: they require heuristics to “converge” on an optimal solution over a very large, non-convex error surface. The heuristics, to simplify a bit, are intended to get the algorithm to converge on a global, not local, solution. Local solutions are unwanted because they can look like global (good) solutions from a snapshot of the error surface, yet turn out to be very bad solutions when one “zooms out” to see the larger picture. This phenomenon is perhaps best illustrated with geographical imagery.
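To make the geographic image concrete, here is a minimal sketch, not taken from any real ANN library: plain gradient descent on a hypothetical one-dimensional toy function f(x) = x^4 - 3x^2 + x, which has a shallow local minimum near x ≈ 1.13 and a deeper global minimum near x ≈ -1.30. The function, step size, step count, and the random-restart heuristic are all illustrative assumptions. Real ANN training descends a far higher-dimensional error surface over the network’s weights, and restarts are only one of several heuristics people use.

```python
import random

# Hypothetical toy "landscape" (an assumption for illustration, not a real
# network's error surface): one shallow valley and one deeper valley.
def f(x: float) -> float:
    return x**4 - 3 * x**2 + x

def grad_f(x: float) -> float:
    # Derivative of f: tells us which way is downhill at x.
    return 4 * x**3 - 6 * x + 1

def gradient_descent(x0: float, lr: float = 0.01, steps: int = 2000) -> float:
    # Always step downhill from wherever we currently stand; it cannot
    # "see" a deeper valley on the other side of a hill.
    x = x0
    for _ in range(steps):
        x -= lr * grad_f(x)
    return x

def random_restarts(n: int = 20, lo: float = -2.0, hi: float = 2.0, seed: int = 0) -> float:
    # Heuristic: descend from several random starting points and keep the
    # result with the lowest f. Seeded so the run is reproducible.
    rng = random.Random(seed)
    candidates = [gradient_descent(rng.uniform(lo, hi)) for _ in range(n)]
    return min(candidates, key=f)

if __name__ == "__main__":
    stuck = gradient_descent(2.0)  # start on the right-hand slope
    best = random_restarts()
    print(f"single run from x=2.0: x={stuck:.3f}, f(x)={f(stuck):.3f}")
    print(f"best of 20 restarts:   x={best:.3f}, f(x)={f(best):.3f}")
```

Started at x = 2.0, a single descent settles in the shallow valley near x ≈ 1.13 (f ≈ -1.07) and stays there, because every small step away from that point goes uphill. Keeping the best of several random starts reaches the deeper valley near x ≈ -1.30 (f ≈ -3.51). A convex objective, like the one an SVM minimizes, has only a single valley, so where the descent starts does not change where it ends.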


Subscribe to Colligo to keep reading this post and get 7 days of free access to the full post archives.